Example With Large-Scale Structure (LSS)¶

This example shows how pydftools deals with LSS. It mimics example

(2) of dftools::dfexample.

In [10]:

# Import relevant libraries

%matplotlib inline

import pydftools as df

from scipy.special import erf

import numpy as np

import time

import matplotlib as mpl

import matplotlib.pyplot as plt

mpl.rcParams['figure.dpi'] = 120 # Make figures a little bigger in the notebook

from IPython.display import display, Markdown

In [2]:

# Survey parameters

sigma = 0.3

seed = 1

Let us create a selection function which involves LSS. We define a dew

functions, namely, f, which is the selection function which gives

the fraction of objects observed at any given combination of

\((x,r)\), dVdr, which is the radial derivative of the volume

(typically proportional to \(r^2\)), and g, which is the

relative over-/under- abundance of objects at distance \(r\) due to

cosmic fluctuations:

In [3]:

dmax = lambda x : 1e-3/np.sqrt(10) * np.sqrt(10**x)

f = lambda x, r : erf((dmax(x) - r)/dmax(x)*20)*0.5 + 0.5

dVdr = lambda r : 0.2 * r**2

g = lambda r : 1 + 0.9*np.sin((r/100)**0.6*2*np.pi)

Now create our selection function object:

In [11]:

selection = df.selection.SelectionRdep(xmin = 5, xmax = 13, rmin = 0, rmax = 100, f=f, dvdr=dVdr, g=g)

Warning: xmin returns Veff(xmin)=0, setting xmin, xmax to 5.45645645646, 13.0

Use a Schechter distribution function:

In [13]:

model = df.model.Schechter()

p_true = model.p0

In [14]:

data, selection, model, other = df.mockdata(seed = seed, sigma = sigma, model=model, selection=selection, verbose=True)

Number of sources in the mock survey (expected): 561.057

Number of sources in the mock survey (selected): 561

Fit mock daa without any bias correction:

In [15]:

selection_without_lss = df.selection.SelectionRdep(xmin = 5, xmax = 13, rmin = 0, rmax = 100, f=f, dvdr=dVdr)

survey1 = df.DFFit(data = data, selection = selection_without_lss, ignore_uncertainties=True)

Warning: xmin returns Veff(xmin)=0, setting xmin, xmax to 5.45645645646, 13.0

Fit mock data while correcting for observational errors (Eddington bias):

In [16]:

survey2 = df.DFFit(data = data, selection = selection_without_lss)

Fit mock data while correcting observational errors and LSS. We create a new (identical) Selection object, both to keep them conceptually different, and also because the object is mutable, and is changed when estimating the LSS function. If we use the same Selection object, the previous objects will be modified when fitting the following object.

In [17]:

selection_est_lss = df.selection.SelectionRdep(xmin = 5, xmax = 13, rmin = 0, rmax = 100, f=f, dvdr=dVdr)

survey3 = df.DFFit(data = data, selection = selection_est_lss, correct_lss_bias = True, lss_weight= lambda x : 10**x)

Warning: xmin returns Veff(xmin)=0, setting xmin, xmax to 5.45645645646, 13.0

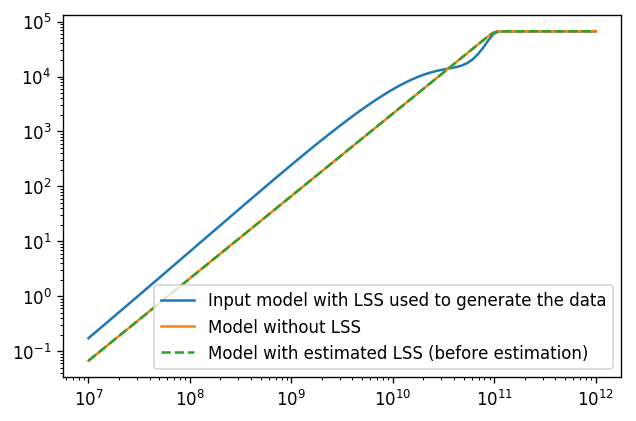

Recall that the fitting is not actually performed until the fit

attribute is accessed. Let’s plot the effective volume functions for

each of our models before fitting:

In [18]:

x = np.linspace(7, 12, 100)

plt.plot(10**x, selection.Veff(x), label="Input model with LSS used to generate the data")

plt.plot(10**x, survey1.selection.Veff(x), label="Model without LSS")

plt.plot(10**x, survey3.selection.Veff(x), ls= '--', label="Model with estimated LSS (before estimation)")

plt.xscale('log')

plt.yscale('log')

plt.legend()

Out[18]:

<matplotlib.legend.Legend at 0x7f7940477c88>

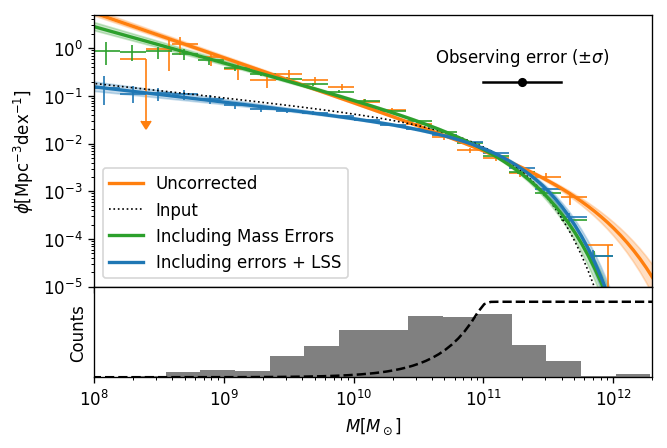

Now let’s perform the fit and plot the fitted mass functions:

In [23]:

fig, ax = df.mfplot(survey1, fit_label="Uncorrected", p_true=p_true, xlim=(1e8,2e12),ylim=(1e-5,5),nbins=20,bin_xmin=8,bin_xmax=12,col_fit='C1',col_data='C1',show_data_histogram = True, show_bias_correction = False, show_posterior_data=False)

fig, ax = df.mfplot(survey2, fit_label="Including Mass Errors", nbins=20, bin_xmin=8,bin_xmax=12,col_fit='C2',col_posterior='C2',show_input_data=False,show_data_histogram = False, fig=fig, ax0=ax[0],ax1=ax[1], show_bias_correction=False)

fig, ax = df.mfplot(survey3, fit_label="Including errors + LSS", nbins=20, bin_xmin=8,bin_xmax=12,col_fit='C0',col_posterior='C0',show_input_data=False,show_data_histogram = False, fig=fig, ax0=ax[0],ax1=ax[1], show_bias_correction=False)

ax[0].text(10**11.3, 5e-1, r"Observing error ($\pm \sigma$)",horizontalalignment='center')

ax[0].plot([10**(11.3 -sigma), 10**(11.3+sigma)], [2e-1, 2e-1], color='k')

ax[0].scatter([10**11.3], [2e-1], color='k', s=20)

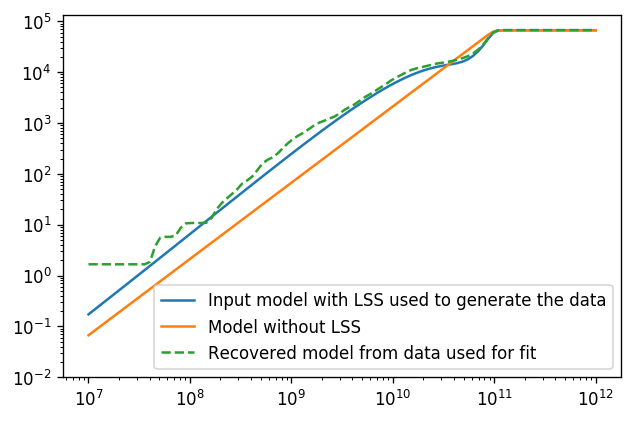

And again, have a look at the effective volumes (post-fitting):

In [12]:

plt.plot(10**x, selection.Veff(x), label="Input model with LSS used to generate the data")

plt.plot(10**x, survey1.selection.Veff(x), label="Model without LSS")

plt.plot(10**x, survey3.selection.Veff(x), ls= '--', label="Recovered model from data used for fit")

plt.xscale('log')

plt.yscale('log')

plt.ylim(1e-2,)

plt.legend()

Out[12]:

<matplotlib.legend.Legend at 0x7fbbd888a4e0>